This article explains how to use Learnosity's Data API to generate templates suitable for creating pencil-and-paper assessments for offline examinations. It also covers how to import the resulting sessions and responses so they can be scored automatically and used for analytics.

Overview

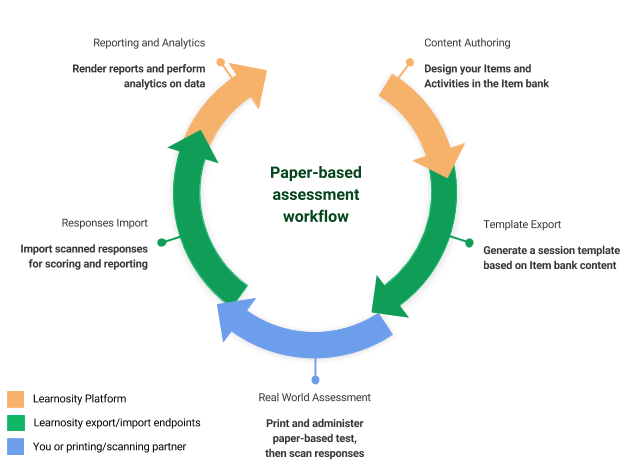

The full Learnosity stack allows you to design assessments, present them to students, capture and automatically score their responses, and then report on and analyse the data. However, there are cases where you may not want to use the full stack, for example when students take a traditional paper-and-pencil exam.

Learnosity's Data API supports this use case by allowing you to author an Activity, export it for printing in the desired form (by you or a third party), import the students’ responses, and then score and analyze the data as if the students had taken the exam digitally.

Templates for export/import of sessions and responses

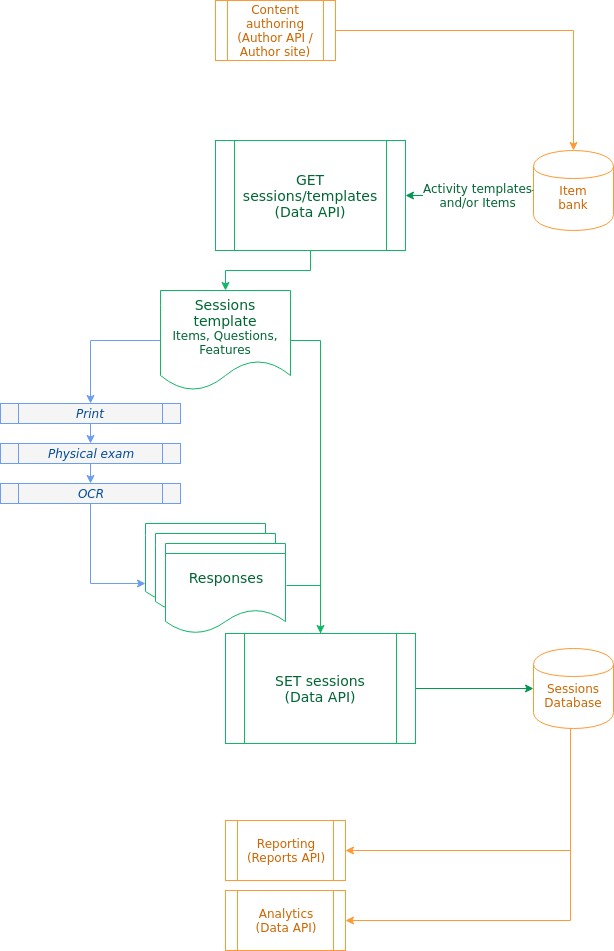

The Data API provides two endpoints, allowing you to export a session template based on Item bank content, and to import the responses associated with a template to create normal student sessions in the Learnosity platform.

The following diagram illustrates the steps needed to support a paper-based assessment with the Learnosity stack.

Generating session templates

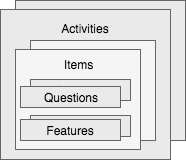

Session templates are JSON documents representing an Activity. They comprise one or more Items, each made of one or more Questions or Features. The session template includes enough information to print the text of each Item, as well as the validation information needed for later scoring.

You can create a session template by sending a POST request to the /sessions/templates endpoint of the Data API, with action="get". A simple example follows.

```json
{
  "action": "get",
  "request": {
    "activity_template_id": "activity_1",
    "items": [
      "item_1",
      "item_2"
    ]
  },
  "security": {
    "consumer_key": "...",
    "domain": "...",
    "signature": "...",
    "timestamp": "YYYYmmdd-HHMM"
  }
}
```

This example requests a session template based on an Activity template, but also lists two Items. Either an activity_template_id or an items array is required. If both are provided, the list of Items supersedes the Items in the Activity template. If only an Activity template is provided and it does not contain any Items, an error occurs. To correct this, add Items to the Activity, or list them in the session template request, as shown.
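The request body can also be assembled in code. The Python sketch below builds the `get` request and mirrors, on the client side, the rule that at least one of `activity_template_id` or `items` must be supplied. The function name `build_template_request` is hypothetical, the `security` values are placeholders (the signature must be computed following the Data API security pattern, which this sketch does not implement), and generating the timestamp in UTC is an assumption.

```python
from datetime import datetime, timezone
from typing import Optional


def build_template_request(
    activity_template_id: Optional[str] = None,
    items: Optional[list[str]] = None,
) -> dict:
    """Build the body of a /sessions/templates "get" request (hypothetical helper)."""
    if not activity_template_id and not items:
        # Mirrors the documented rule: one of the two must be supplied.
        raise ValueError("Provide an activity_template_id, an items list, or both")

    request: dict = {}
    if activity_template_id:
        request["activity_template_id"] = activity_template_id
    if items:
        # When both are present, these Items supersede the Activity template's own.
        request["items"] = list(items)

    return {
        "action": "get",
        "request": request,
        "security": {
            # Placeholders: fill in following the Data API security pattern.
            "consumer_key": "...",
            "domain": "...",
            "signature": "...",
            # YYYYmmdd-HHMM format from the example; UTC is an assumption.
            "timestamp": datetime.now(timezone.utc).strftime("%Y%m%d-%H%M"),
        },
    }
```

Raising locally for a request with neither field avoids a round trip that the endpoint would reject anyway; it cannot detect an Activity template that exists but contains no Items.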

The security property is built following the security pattern for the Data API.

The request affords the same flexibility in fetching and configuring Items as the Items API, in terms of using multiple Item banks (organisation_ids) or pools, and configuring scoring methods or subscore groups.

Printing and scanning

The template obtained in the previous section serves two purposes. One is to inform the response import, discussed in the next section. The other purpose is to provide enough information so you can prepare an examination paper and print it out.

Printing assessment papers

The /sessions/templates endpoint returns a JSON document containing the session template. The template contains a data.items array with the contents of all the Items and their Questions or Features. A full template is too long to include here, but only a subset of the Item properties is relevant for preparing a printable exam paper. The rest is used during the import to validate the responses, and doesn't need to be touched.

Every Item contains a questions array of Questions of various types. The features array may also be relevant if the Item relies on non-answerable Features such as text passages.

```json
{
  "reference": "item_1",
  "content": "...",
  "metadata": "...",
  "workflow": [...],
  "feature_ids": [...],
  "questions": [...],
  "features": [...],
  "question_ids": [...]
}
```

For the data relevant to printing each Question (e.g., stimulus or distractors), refer to the Appendix on Question and Feature formats.
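As a starting point for print layout, the Python sketch below walks the data.items array and lists, for each Item, the types of its Questions and Features in order. It assumes the parsed template is shaped like `{"data": {"items": [...]}}` and that each Question or Feature keeps its details under its own `data` key, as described in the Appendix; the function name `outline_template` is hypothetical.

```python
def outline_template(template: dict) -> list[dict]:
    """Summarise each Item of a session template for print layout.

    Assumes template["data"]["items"] holds the Items, and that every
    Question or Feature stores its details under a "data" key.
    """
    outline = []
    for item in template["data"]["items"]:
        outline.append({
            "reference": item["reference"],
            # Question types, in the order they appear in the Item.
            "questions": [q["data"]["type"] for q in item.get("questions", [])],
            # Non-answerable Features, such as shared passages.
            "features": [f["data"]["type"] for f in item.get("features", [])],
        })
    return outline
```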

Digitizing responses

How to best digitize students' responses depends on your use case. Some providers (such as Paperscorer) can take care of both printing and scanning of the papers in a fully automated way. It is also possible, though not advisable, to do this process manually.

Regardless of the chosen digitization method, we recommend outputting the responses immediately in a format suitable for importing into Learnosity. The session format and the response formats for specific Question types are described in the Appendix.

Importing responses

After a student has completed a paper-based assessment, the digitized results can be uploaded into the Learnosity platform by sending a POST request to the /sessions endpoint.

The request must use action="set" and data_format="from_template", and consists mainly of a template object and a data array.

```json
{
  "action": "set",
  "request": {
    "data_format": "from_template",
    "template": {...},
    "data": [...]
  },
  "security": {...}
}
```

The template property should contain the data returned by the /sessions/templates request, unaltered. The information this template contains will be used for validation and scoring purposes. The data array contains one or more objects, each describing one student's session and its responses, as generated when digitizing the submitted papers in the previous step.
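A minimal Python sketch of the import body follows. It passes the template through untouched and performs only basic sanity checks on the sessions before sending. The helper name `build_import_request` is hypothetical, and the caller is assumed to supply a `security` object already built following the Data API security pattern.

```python
def build_import_request(template: dict, sessions: list[dict], security: dict) -> dict:
    """Assemble a /sessions "set" request with data_format="from_template"."""
    if not sessions:
        raise ValueError("At least one session is required")
    for session in sessions:
        if "session_id" not in session or "responses" not in session:
            raise ValueError("Each session needs a session_id and a responses list")
    return {
        "action": "set",
        "request": {
            "data_format": "from_template",
            # Passed through exactly as returned by /sessions/templates.
            "template": template,
            "data": sessions,
        },
        # Built by the caller following the Data API security pattern.
        "security": security,
    }
```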

Conclusion

You can use the whole set of Learnosity authoring and analytics tools even when you need to administer an assessment in paper-and-pencil form. The Data API provides two endpoints that let you export session templates and import responses. You can use the exported templates to create whatever kind of physical assessment paper best fits your requirements.

Appendix: Template and sessions/responses formats

If you decide to prepare printable documents, or scan responses yourself, you will need to understand the format of the Questions in the template, and know how to represent the sessions and their responses. This appendix describes those formats for you or your print/scan partner.

Note that the formats described here are only valid for exporting and importing sessions from templates. They are simpler than the formats used elsewhere in the Learnosity APIs, and are not compatible with them.

Template Questions and Features formats

The template exported from the /sessions/templates endpoint contains the representation of the Activity's Items and Questions. This is where the information relevant for printing (e.g., stimulus or distractors) can be found.

While Questions of any type could be exported for printing, this document only details the most common Question types:

- Multiple choice (mcq)
- Fill in the blanks (clozetext)
- Short text (shorttext) / Plain text essay (plaintext) / Long text essay (longtext)

For more response formats, you can refer to the full reference for all supported Question types.

In addition, we discuss passage (sharedpassage) Features.

Both Question and Feature objects have a data property containing the relevant information.

```json
{
  "type": "mcq",
  "widget_type": "response",
  "reference": "...",
  "data": {
    "stimulus": "...",
    "options": [...],
    "type": "mcq",
    "validation": {...},
    "ui_style": {...}
  },
  "metadata": {...}
}
```

Let's discuss the content of the data property.

Common properties of all Question types

Every Question has a stimulus property which generally contains the instructions for the question.

```json
{
  "stimulus": "<p>[This is the stem.]</p>",
  "type": "shorttext",
  "validation": {...},
  "metadata": {...},
  "id": "..."
}
```

The id property is noteworthy: when importing student sessions, this identifier will be repeated alongside the matching response.
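Because every imported response must point back to one of these ids, it can help to index the template's Questions by id before coding responses. The Python sketch below does that and reports any question_id that the template does not define. It assumes the id sits inside each Question's `data` object, as in the example above, and that the template is shaped like `{"data": {"items": [...]}}`; both function names are hypothetical.

```python
def index_questions(template: dict) -> dict[str, dict]:
    """Map each Question id to its data object, across all Items."""
    index: dict[str, dict] = {}
    for item in template["data"]["items"]:
        for question in item.get("questions", []):
            data = question["data"]
            index[data["id"]] = data
    return index


def unknown_question_ids(template: dict, responses: list[dict]) -> list[str]:
    """Return the question_id values in responses that the template does not define."""
    known = index_questions(template)
    return [r["question_id"] for r in responses if r["question_id"] not in known]
```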

Multiple choice questions

Multiple choice Questions have a set of options, listing the distractors. Each option has a label, used for display, and a value, used to code the response of a student who has selected that answer.

```json
{
  "options": [
    { "label": "[Choice A]", "value": "0" },
    { "label": "[Choice B]", "value": "1" },
    { "label": "[Choice C]", "value": "2" },
    { "label": "[Choice D]", "value": "3" }
  ],
  "stimulus": "<p>[This is the stem.]</p>",
  "type": "mcq",
  "ui_style": {...},
  "validation": {...},
  "metadata": {...},
  "id": "..."
}
```
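On paper, options are usually shown with letters, so the scanning step needs a way to turn a marked letter back into the option's value. The Python sketch below renders an mcq data object as plain text with lettered choices and returns the letter-to-value map. The lettering scheme, the helper names, and the regex-based tag stripping are all assumptions for illustration; rich content such as images or math would need a real HTML renderer, and ui_style is ignored here.

```python
import html
import re
from string import ascii_uppercase


def strip_tags(markup: str) -> str:
    """Crude HTML-to-text conversion, adequate only for simple stems and labels."""
    return html.unescape(re.sub(r"<[^>]+>", "", markup)).strip()


def render_mcq(question: dict) -> tuple[str, dict[str, str]]:
    """Render an mcq data object as printable text with lettered choices.

    Returns the text and a letter -> option value map, so a mark scanned
    as "B" can later be coded with the matching option value.
    """
    lines = [strip_tags(question["stimulus"])]
    letter_to_value: dict[str, str] = {}
    for letter, option in zip(ascii_uppercase, question["options"]):
        lines.append(f"({letter}) {strip_tags(option['label'])}")
        letter_to_value[letter] = option["value"]
    return "\n".join(lines), letter_to_value
```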

Fill in the blank Questions

Fill in the blank Questions share the common properties of all Question types, and additionally have a template property containing the text with missing words (identified by the {{response}} keyword) that the learner needs to complete.

```json
{
  "stimulus": "<p>[This is the stem.]</p>",
  "template": "Risus {{response}}, et tincidunt turpis facilisis.",
  "type": "clozetext",
  "validation": {...},
  "metadata": {...},
  "id": "..."
}
```
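For printing, each {{response}} keyword can be replaced with a numbered blank so that answers can be transcribed in a known order. The Python sketch below does this with a regular expression. The blank style and the function name are hypothetical, and the sketch assumes that the order of the blanks is the order expected in the response's value array; any HTML in the template text would still need stripping separately.

```python
import re


def render_clozetext(template_text: str) -> tuple[str, int]:
    """Replace each {{response}} keyword with a numbered blank.

    Returns the printable text and the number of blanks found.
    """
    count = 0

    def numbered_blank(_match: re.Match) -> str:
        nonlocal count
        count += 1
        return f"({count}) ________"

    text = re.sub(r"\{\{response\}\}", numbered_blank, template_text)
    return text, count
```

For the template above, this yields `Risus (1) ________, et tincidunt turpis facilisis.` with one blank.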

Shared passage Features

Shared passages are longer texts that do not include a Question for learners to answer, but provide background context for other Items and Questions. The most important properties are heading and content.

```json
{
  "type": "sharedpassage",
  "heading": "Case Study",
  "content": "<p>Lorem ipsum dolor sit amet...</p>"
}
```
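A shared passage can be printed as its heading followed by the content converted to plain paragraphs. The Python sketch below does a crude conversion, turning closing `</p>` tags into blank lines and dropping the remaining tags. The function name and the regex approach are assumptions; passages with tables, images, or lists would need a proper HTML renderer.

```python
import html
import re


def render_passage(feature: dict) -> str:
    """Render a sharedpassage data object as heading plus plain-text paragraphs."""
    # Turn paragraph ends into blank lines, then drop any remaining tags.
    body = re.sub(r"</p>\s*", "\n\n", feature.get("content", ""))
    body = html.unescape(re.sub(r"<[^>]+>", "", body)).strip()
    heading = feature.get("heading", "")
    return f"{heading}\n\n{body}" if heading else body
```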

Session and responses formats

When scanning student-submitted papers, it is recommended to store output sessions and responses in the following format, which is used in the data property of the request to the /sessions endpoint.

A session comprises various identifiers, and the list of responses.

```json
{
  "user_id": "...",
  "activity_id": "...",
  "session_id": "<UUIDv4>",
  "responses": [...]
}
```

All three IDs will be stored in the Learnosity platform as identifiers for the session. user_id is used to link multiple tests to a given user, activity_id can be used to link multiple Activities across users, and session_id must be globally unique. We recommend using UUIDv4 for all of them, but you can choose to use another sort of identifier, depending on what makes most sense to meaningfully link the data in the rest of your platform. Only session_id is required to be a UUIDv4.
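The Python sketch below creates one session object for the data array, generating session_id with the standard library's `uuid.uuid4()`. user_id and activity_id are passed in unchanged, since they can be whatever identifiers best link the data in your own platform. The function name `new_session` is hypothetical.

```python
import uuid


def new_session(user_id: str, activity_id: str, responses: list[dict]) -> dict:
    """Create one session object for the data array of the import request."""
    return {
        "user_id": user_id,
        "activity_id": activity_id,
        # session_id must be a globally unique UUIDv4.
        "session_id": str(uuid.uuid4()),
        "responses": responses,
    }
```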

The responses array must contain a list of response objects, whose format depends on the Question type, as described below. For reference, a mapping of Question names to their type codes is available.

Each response object must reference the id of one of the Questions from the template via its question_id property. It also has a type, which is either "string" or "array", depending on how the response is represented.

```json
{
  "question_id": "...",
  "type": "...",
  ...
}
```

Multiple choice (mcq) and Fill in the blanks (clozetext)

Multiple choice and fill-in-the-blanks Questions allow the students to provide one or more responses. The type of response must be array, and its value an array of one or more responses.

```json
{
  "question_id": "...",
  "type": "array",
  "value": [
    "0", "1"
  ]
}
```

For Multiple choice Questions, the elements in the value array must match the value of one of the distractors in the options property of the Question (from the template).

For Fill in the blanks Questions, the elements are simply strings of the student's literal answer.
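The Python sketch below builds an array response from a Question's data object and, for multiple choice, checks each answer against the option values in the template before the import. The function name `array_response` is hypothetical; the checks only reflect the rules stated above (one or more responses, mcq values taken from options).

```python
def array_response(question: dict, answers: list[str]) -> dict:
    """Build an mcq or clozetext response from a Question's data object.

    For mcq, every answer must be the value of one of the Question's options.
    For clozetext, answers are the student's literal strings.
    """
    if question["type"] not in ("mcq", "clozetext"):
        raise ValueError(f"Unsupported Question type: {question['type']}")
    if not answers:
        raise ValueError("The value array must contain one or more responses")
    if question["type"] == "mcq":
        allowed = {option["value"] for option in question["options"]}
        unknown = [answer for answer in answers if answer not in allowed]
        if unknown:
            raise ValueError(f"Not option values of {question['id']}: {unknown}")
    return {"question_id": question["id"], "type": "array", "value": list(answers)}
```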

Short text (shorttext)

Short text Questions expect a response in a few words. The type of the response must be string, and its value is the string of the student's literal answer, URL-encoded, with + instead of spaces. An additional characterCount property, which indicates the number of characters in the string, must also be provided.

```json
{
  "question_id": "...",
  "type": "string",
  "value": "makka+pakka",
  "characterCount": 11
}
```
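In Python, the standard library's `urllib.parse.quote_plus` produces URL-encoding with + for spaces. The sketch below uses it to build a short text response. Two points are assumptions rather than documented behaviour: that quote_plus matches the encoding Learnosity expects for characters other than spaces (for example apostrophes or accented letters), and that characterCount is taken on the raw answer before encoding. The function name is hypothetical.

```python
from urllib.parse import quote_plus


def shorttext_response(question_id: str, answer: str) -> dict:
    """Encode a short text answer, URL-encoded with + for spaces."""
    return {
        "question_id": question_id,
        "type": "string",
        "value": quote_plus(answer),
        # Assumption: characters are counted on the raw answer, before encoding.
        "characterCount": len(answer),
    }
```

For the answer `makka pakka`, this produces the value and characterCount shown in the example above.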

Plain text essay (plaintext) and Long text essay (longtext)

Essay Questions expect longer textual responses. The type of the response must be string, and its value the string of the student's literal answer, URL-encoded, with + instead of spaces. An additional wordCount property, which indicates the number of words in the string, must also be provided.

```json
{
  "question_id": "...",
  "type": "string",
  "value": "upsy+daisy",
  "wordCount": 2
}
```
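Essay responses follow the same encoding, with wordCount instead of characterCount. The Python sketch below counts words as whitespace-separated tokens of the raw answer; that definition of a word, like the use of `quote_plus` for the encoding, is an assumption. The function name is hypothetical.

```python
from urllib.parse import quote_plus


def essay_response(question_id: str, answer: str) -> dict:
    """Encode a plaintext or longtext essay answer, URL-encoded with + for spaces."""
    return {
        "question_id": question_id,
        "type": "string",
        "value": quote_plus(answer),
        # Assumption: words are whitespace-separated tokens of the raw answer.
        "wordCount": len(answer.split()),
    }
```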